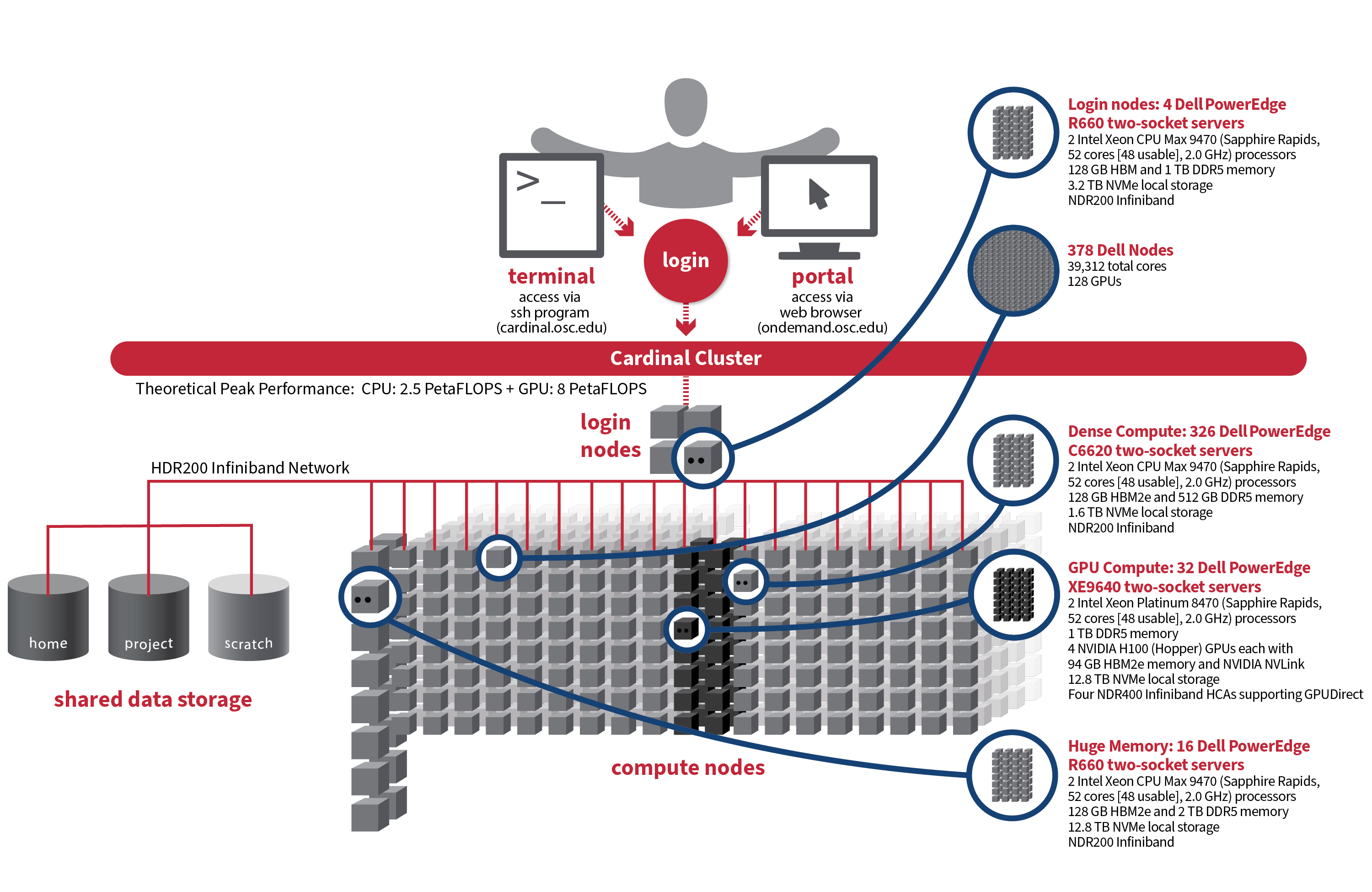

Detailed system specifications:

- 378 Dell nodes, 39,312 total cores, 128 GPUs

- Dense Compute: 326 Dell PowerEdge C6620 two-socket servers, each with:
  - 2 Intel Xeon CPU Max 9470 (Sapphire Rapids, 52 cores [48 usable], 2.0 GHz) processors
  - 128 GB HBM2e and 512 GB DDR5 memory
  - 1.6 TB NVMe local storage
  - NDR200 InfiniBand

- GPU Compute: 32 Dell PowerEdge XE9640 two-socket servers, each with:
  - 2 Intel Xeon Platinum 8470 (Sapphire Rapids, 52 cores [48 usable], 2.0 GHz) processors
  - 1 TB DDR5 memory
  - 4 NVIDIA H100 (Hopper) GPUs, each with 94 GB HBM2e memory and NVIDIA NVLink
  - 12.8 TB NVMe local storage
  - Four NDR400 InfiniBand HCAs supporting GPUDirect

- Analytics: 16 Dell PowerEdge R660 two-socket servers, each with:
  - 2 Intel Xeon CPU Max 9470 (Sapphire Rapids, 52 cores [48 usable], 2.0 GHz) processors
  - 128 GB HBM2e and 2 TB DDR5 memory
  - 12.8 TB NVMe local storage
  - NDR200 InfiniBand

- Login nodes: 4 Dell PowerEdge R660 two-socket servers, each with:
  - 2 Intel Xeon CPU Max 9470 (Sapphire Rapids, 52 cores [48 usable], 2.0 GHz) processors
  - 128 GB HBM and 1 TB DDR5 memory
  - 3.2 TB NVMe local storage
  - NDR200 InfiniBand
  - IP address: 192.148.247.[182-185]

- ~10.5 PetaFLOPS theoretical system peak performance
  - ~8 PetaFLOPS (GPU)
  - ~2.5 PetaFLOPS (CPU)

- 9 physical racks, plus two Coolant Distribution Units (CDUs) providing direct-to-chip liquid cooling for all nodes
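The headline totals can be cross-checked against the per-partition figures in the list above. The following is a minimal sketch of that arithmetic in bash; the variable names are illustrative. It assumes the headline core count includes all 52 physical cores per processor on every node type (login nodes included), which is the reading that reproduces the published number.

```shell
#!/bin/bash
# Node counts per node type, copied from the specification list.
dense_nodes=326
gpu_nodes=32
analytics_nodes=16
login_nodes=4

# Every node type is a two-socket server with 52-core processors,
# of which 48 cores per processor are listed as usable.
sockets_per_node=2
cores_per_socket=52
usable_cores_per_socket=48
gpus_per_gpu_node=4

total_nodes=$(( dense_nodes + gpu_nodes + analytics_nodes + login_nodes ))
# Assumption: the headline figure counts physical (not usable) cores.
total_cores=$(( total_nodes * sockets_per_node * cores_per_socket ))
usable_cores_per_node=$(( sockets_per_node * usable_cores_per_socket ))
total_gpus=$(( gpu_nodes * gpus_per_gpu_node ))

echo "nodes=${total_nodes} cores=${total_cores} gpus=${total_gpus}"
echo "usable cores per node=${usable_cores_per_node}"
```

Under these assumptions the script reports 378 nodes, 39,312 cores, and 128 GPUs, matching the first bullet, and 96 usable cores per node.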

How to Connect

- SSH Method

To log in to the Cardinal cluster at OSC, ssh to the following hostname:

cardinal.osc.edu

You can either use an ssh client application or execute ssh on the command line in a terminal window as follows:

ssh <username>@cardinal.osc.edu

You may see a warning message that includes an SSH key fingerprint. Verify that the fingerprint in the message matches one of the SSH key fingerprints listed here, then type yes.

Once connected, you are on a Cardinal login node and have access to the compilers and other software development tools. You can run programs interactively or through batch requests. We use control groups on the login nodes to keep them stable, so please use batch jobs for any compute-intensive or memory-intensive work. See the following sections for details.
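For the fingerprint check described above, it can help to print the fingerprints the server presents before connecting. The sketch below uses the standard OpenSSH tools `ssh-keyscan` and `ssh-keygen`; the 5-second timeout and the fallback messages are illustrative choices, not OSC recommendations. The output is only useful when compared against the fingerprints OSC publishes; it does not verify the host by itself.

```shell
#!/bin/bash
host="cardinal.osc.edu"

# ssh-keyscan fetches the host's public keys without logging in.
# -T 5 gives up after 5 seconds; errors are silenced so the script
# still finishes cleanly when the host is unreachable.
keys="$(ssh-keyscan -T 5 "$host" 2>/dev/null || true)"

if [ -n "$keys" ]; then
  # ssh-keygen -l prints fingerprints; "-f -" reads the keys from stdin.
  printf '%s\n' "$keys" | ssh-keygen -lf - \
    || echo "ssh-keygen could not parse the keys returned by $host."
else
  echo "Could not retrieve host keys from $host; skipping fingerprint listing."
fi
```

Each printed line shows the key size, the fingerprint, the host, and the key type, so it can be matched by eye against the published list.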

- OnDemand Method

You can also log in to Cardinal with our OnDemand tool. The first step is to log in to ondemand.osc.edu. Once logged in, you can access Cardinal by clicking "Clusters" and then selecting ">_Cardinal Shell Access".

Instructions on how to use OnDemand can be found at the OnDemand documentation page.

File Systems

Cardinal accesses the same OSC mass storage environment as our other clusters. Therefore, users have the same home directory as on the old clusters. Full details of the storage environment are available in our storage environment guide.

Software Environment

The Cardinal cluster runs on Red Hat Enterprise Linux (RHEL) 9, which provides access to modern tools and libraries but may also require adjustments to your workflows. Please refer to the Cardinal Software Environment page for key software changes and available software.

Cardinal uses the same module system as the other clusters. You can keep up to date on the software packages that have been made available on Cardinal by viewing the Software by System page and selecting the Cardinal system.

Programming Environment

The Cardinal cluster supports programming in C, C++, and Fortran. The available compiler suites include Intel, oneAPI, and GCC. Additionally, users have access to high-bandwidth memory (HBM), which is expected to enhance the performance of memory-bound applications. Please refer to the Cardinal Programming Environment page for details on compiler commands, parallel and GPU computing, and instructions on how to effectively utilize HBM.

Batch Specifics

The Cardinal cluster uses Slurm, with the PBS compatibility layer disabled. Refer to the documentation for our batch environment to understand how to use the batch system on OSC hardware, to the Slurm migration page to understand how to use Slurm, and to the batch limit page for the scheduling policy during the Program.
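As an illustration of a Slurm-native job, the following is a minimal batch script sketch. The `#SBATCH` options shown are standard Slurm options, but the job name, the project code `PAS1234`, and the resource values are hypothetical placeholders; the batch environment documentation remains the authority on which options and limits apply on Cardinal.

```shell
#!/bin/bash
#SBATCH --job-name=hello_cardinal   # hypothetical job name
#SBATCH --account=PAS1234           # hypothetical project code; use your own
#SBATCH --nodes=1
#SBATCH --ntasks-per-node=4         # illustrative value, not a recommendation
#SBATCH --time=00:10:00             # wall-clock limit, HH:MM:SS

# Slurm sets SLURM_JOB_ID (and SLURM_NTASKS when a task count is requested)
# inside a job. The defaults below keep the script harmless if it is run
# directly in a shell instead of being submitted.
job_id="${SLURM_JOB_ID:-not-in-a-job}"
ntasks="${SLURM_NTASKS:-1}"

summary="job=${job_id} tasks=${ntasks} host=$(uname -n)"
echo "$summary"
```

Saved under a name such as `hello_cardinal.sh` (a hypothetical filename), the script would be submitted with `sbatch hello_cardinal.sh` and its status checked with `squeue -u $USER`. Because the PBS compatibility layer is disabled, scripts that rely on `#PBS` directives or PBS commands should be converted to their Slurm equivalents first.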