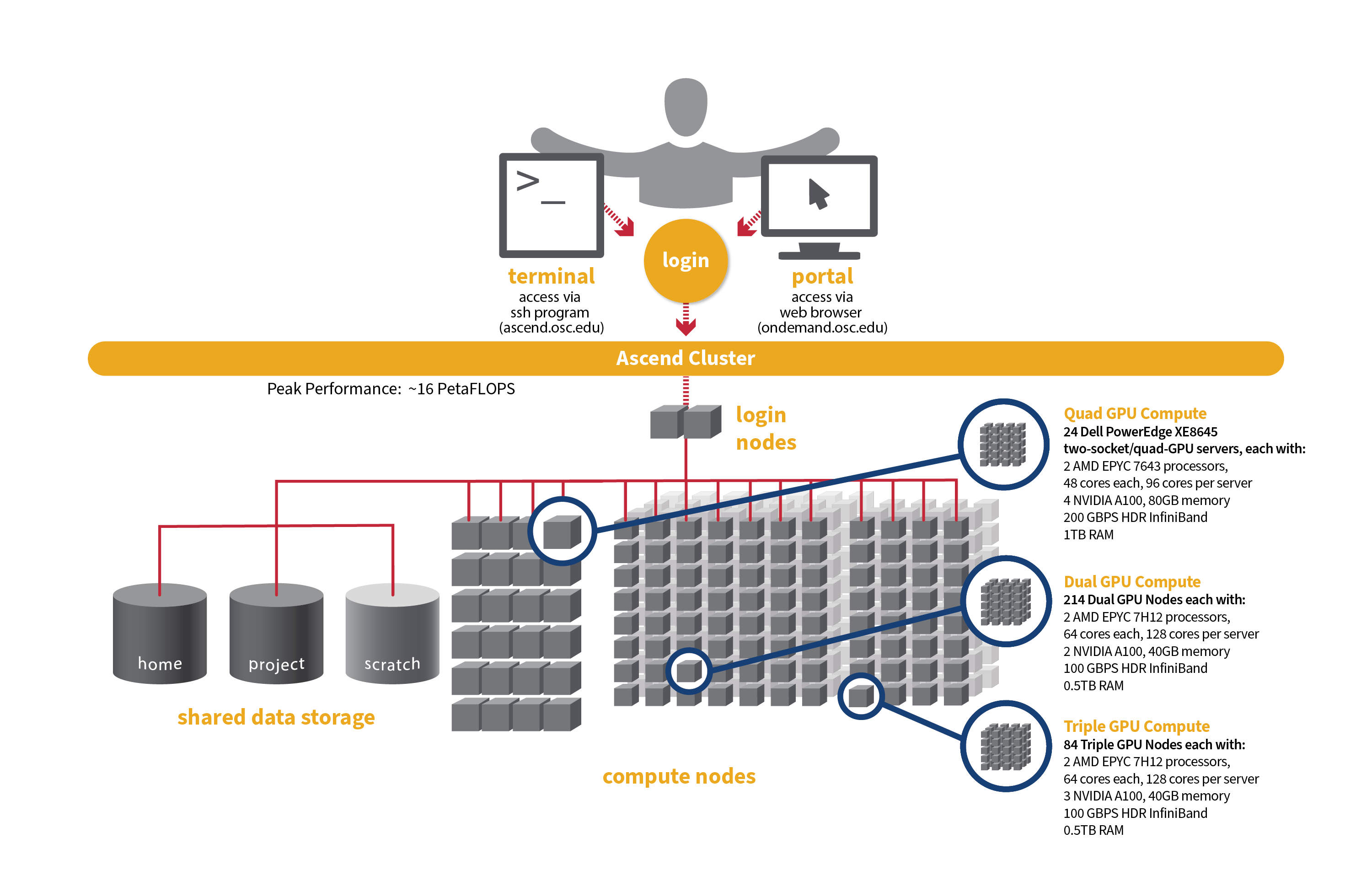

OSC's original Ascend cluster, installed in fall 2022, is a Dell-built cluster with AMD EPYC™ CPUs and NVIDIA A100 80GB GPUs. In 2025, OSC expanded the Ascend cluster with 298 additional Dell R7525 server nodes featuring AMD EPYC 7H12 CPUs and NVIDIA A100 40GB GPUs.

Hardware

Detailed system specifications for Slurm workloads:

- Quad GPU Compute: 24 Dell PowerEdge XE8645 two-socket/quad-GPU servers, each with:

- 2 AMD EPYC 7643 (Milan) processors (2.3 GHz, each with 44 usable cores)

- 4 NVIDIA A100 GPUs with 80GB memory each, connected by NVIDIA NVLink

- 921GB usable memory

- 12.8TB NVMe internal storage

- HDR200 InfiniBand (200 Gbps)

- Dual GPU Compute: 190 Dell PowerEdge R7525 two-socket/dual GPU servers, each with:

- 2 AMD EPYC 7H12 processors (2.60 GHz, each with 60 usable cores)

- 2 NVIDIA A100 GPUs with 40GB memory each, PCIe, 250W

- 472GB usable memory

- 1.92TB NVMe internal storage

- HDR100 InfiniBand (100 Gbps)

- Triple GPU Compute: 84 Dell PowerEdge R7525 two-socket/triple GPU servers, each with:

- 2 AMD EPYC 7H12 processors (2.60 GHz, each with 60 usable cores)

- 3 NVIDIA A100 GPUs with 40GB memory each, PCIe, 250W (3rd GPU on each node is under testing and not available for user jobs)

- 472GB usable memory

- 1.92TB NVMe internal storage

- HDR100 InfiniBand (100 Gbps)

- Theoretical system peak performance

- ~16 PetaFLOPS

- 40,448 total cores and 776 GPUs (some cores and GPUs are reserved)

- 2 login nodes

- IP address: 192.148.247.[180-181]
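The 40,448 core total can be cross-checked with a little arithmetic. The sketch below assumes the total counts physical cores, using the published per-chip counts of 48 cores for the EPYC 7643 and 64 cores for the EPYC 7H12 (these figures are not stated on this page), across all 24 quad GPU nodes and all 298 nodes from the 2025 expansion. Under that assumption the total matches exactly, which suggests that the gap between physical and "usable" cores, and the 24 expansion nodes not covered by the 190 + 84 listed above, make up the reserved resources.

```shell
#!/usr/bin/env bash
# Cross-check of the 40,448 core total. ASSUMPTION: the total counts physical
# cores on all 24 quad GPU nodes and all 298 nodes of the 2025 expansion.
quad_nodes=24
expansion_nodes=298

# Physical cores per node: 2 sockets x 48 (EPYC 7643) and 2 sockets x 64 (EPYC 7H12)
physical_per_quad=$((2 * 48))
physical_per_expansion=$((2 * 64))
total_cores=$((quad_nodes * physical_per_quad + expansion_nodes * physical_per_expansion))

# Usable cores per node, from the per-socket "usable cores" figures above
usable_per_quad=$((2 * 44))
usable_per_dual=$((2 * 60))

echo "total physical cores:              ${total_cores}"      # prints 40448 (2304 + 38144)
echo "usable cores per quad GPU node:    ${usable_per_quad}"  # prints 88
echo "usable cores per dual/triple node: ${usable_per_dual}"  # prints 120
```

The usable per-node figures (88 and 120) are the more relevant numbers when sizing a job that fills a whole node.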

How to Connect


SSH Method

To log in to Ascend at OSC, ssh to the following hostname:

ascend.osc.edu

You can either use an SSH client application or run ssh from the command line in a terminal window as follows:

ssh <username>@ascend.osc.edu

You may see a warning message that includes an SSH key fingerprint. Verify that the fingerprint in the message matches one of the SSH key fingerprints listed here, then type yes.
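If you prefer to check the fingerprints before the first connection, a minimal shell sketch like the one below can print them from your local machine. It assumes OpenSSH 7.2 or newer (needed for ssh-keygen to read keys from standard input with -f -), and the function name show_host_fingerprints is hypothetical. Note that ssh-keyscan is itself unauthenticated, so its output only means something once it matches OSC's published list.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the SHA256 fingerprints of the host keys a server
# presents, for comparison with OSC's published list BEFORE answering "yes".
# Requires OpenSSH 7.2+ so that "ssh-keygen -f -" can read keys from stdin.
show_host_fingerprints() {
  local host="${1:-ascend.osc.edu}"
  # ssh-keyscan fetches the public host keys; its progress comments go to stderr
  ssh-keyscan "$host" 2>/dev/null | ssh-keygen -l -E sha256 -f -
}
```

Usage: run show_host_fingerprints, compare each SHA256 line with OSC's list, and only then run ssh <username>@ascend.osc.edu and answer yes.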

Once connected, you are on an Ascend login node and have access to the compilers and other software development tools. You can run programs interactively or through batch requests. OSC uses control groups (cgroups) on the login nodes to limit per-user resource use and keep the login nodes stable, so please use batch jobs for any compute-intensive or memory-intensive work. See the following sections for details.


OnDemand Method

You can also log in to Ascend at OSC with our OnDemand tool. First, log in to OnDemand. Once logged in, access Ascend by clicking "Clusters" and then selecting ">_Ascend Shell Access".

Instructions on how to connect to OnDemand can be found at the OnDemand documentation page.

File Systems

Ascend accesses the same OSC mass storage environment as our other clusters, so users have the same home directory on Ascend as on OSC's other clusters. Full details of the storage environment are available in our storage environment guide.

Software Environment

The Ascend cluster now runs Red Hat Enterprise Linux (RHEL) 9, which introduces several software-related changes compared to the RHEL 7/8 environment. These updates provide access to modern tools and libraries but may also require adjustments to your workflows. You can stay up to date on the software packages available on Ascend by viewing the Available software list on Next Gen Ascend.

Key change

A key change is that you are now required to specify the module version when loading any modules. For example, instead of using module load intel, you must use module load intel/2021.10.0. Failure to specify the version will result in an error message.

Below is an example message when loading gcc without specifying the version:

    $ module load gcc
    Lmod has detected the following error: These module(s) or extension(s) exist but cannot be loaded as requested: "gcc".
    You encountered this error for one of the following reasons:
    1. Missing version specification: On Ascend, you must specify an available version.
    2. Missing required modules: Ensure you have loaded the appropriate compiler and MPI modules.
    Try: "module spider gcc" to view available versions or required modules.
    If you need further assistance, please contact oschelp@osc.edu with the subject line "lmod error: gcc"
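Because unversioned module load lines now fail, existing job scripts may need updating. The following is a minimal sketch of a hypothetical helper, find_unversioned_loads, that uses awk to list every module load (or module add) argument lacking a /version suffix. It assumes one module command per line, skips option flags and trailing comments, and does not recognize the ml shorthand.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report module load/add arguments that have no version,
# printed as file:line: module. Assumes one "module" command per line.
find_unversioned_loads() {
  awk '$1 == "module" && ($2 == "load" || $2 == "add") {
         for (i = 3; i <= NF; i++) {
           if ($i ~ /^#/) break                # stop at a trailing comment
           if ($i ~ /^-/) continue             # skip option flags
           if ($i !~ /\/./)                    # no "/" followed by a version
             printf "%s:%d: %s\n", FILENAME, FNR, $i
         }
       }' "$@"
}
```

For example, running it over a set of job scripts (say, a hypothetical ~/jobs/*.sh) prints one line per unversioned module; each can then be looked up with module spider and pinned, e.g. module load intel/2021.10.0.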

Batch Specifics

Refer to this Slurm migration page to understand how to use Slurm on the Ascend cluster.
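The migration page is the authoritative reference. As a rough illustration only, a minimal batch script requesting two A100 GPUs on one node might look like the sketch below. The account code PAS1234 is a hypothetical placeholder, no partition is specified because partition names are not given here, and the time limit is arbitrary; check the migration page for Ascend's actual partitions and defaults.

```shell
#!/bin/bash
#SBATCH --job-name=a100-check
#SBATCH --account=PAS1234         # hypothetical project code: use your own
#SBATCH --nodes=1
#SBATCH --gpus-per-node=2         # request 2 A100 GPUs on a single node
#SBATCH --time=00:10:00

# Slurm sets SLURM_JOB_ID inside a job; fall back to a placeholder so the
# script also runs (and just prints placeholders) outside Slurm.
job_id="${SLURM_JOB_ID:-not-in-slurm}"
# With GPU scheduling, Slurm normally exports CUDA_VISIBLE_DEVICES for the allocated GPUs.
visible_gpus="${CUDA_VISIBLE_DEVICES:-none}"

echo "job ${job_id} on $(hostname)"
echo "GPUs visible to this job: ${visible_gpus}"

# Load software with an explicit version, as Ascend requires, e.g.:
# module load intel/2021.10.0
```

Submit it with sbatch followed by the script name; by default Slurm writes the output to slurm-<jobid>.out in the directory the job was submitted from.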

Using OSC Resources

For more information about how to use OSC resources, please see our guide on batch processing at OSC. For specific information about modules and file storage, please see the Batch Execution Environment page.