AlphaFold 2.1.0 now available on Ascend

AlphaFold 2.1.0 is now available on Ascend. Load it with: module load alphafold/2.1.0

AlphaFold 2.1.2 is now available on Ascend. Load it with: module load alphafold/2.1.2

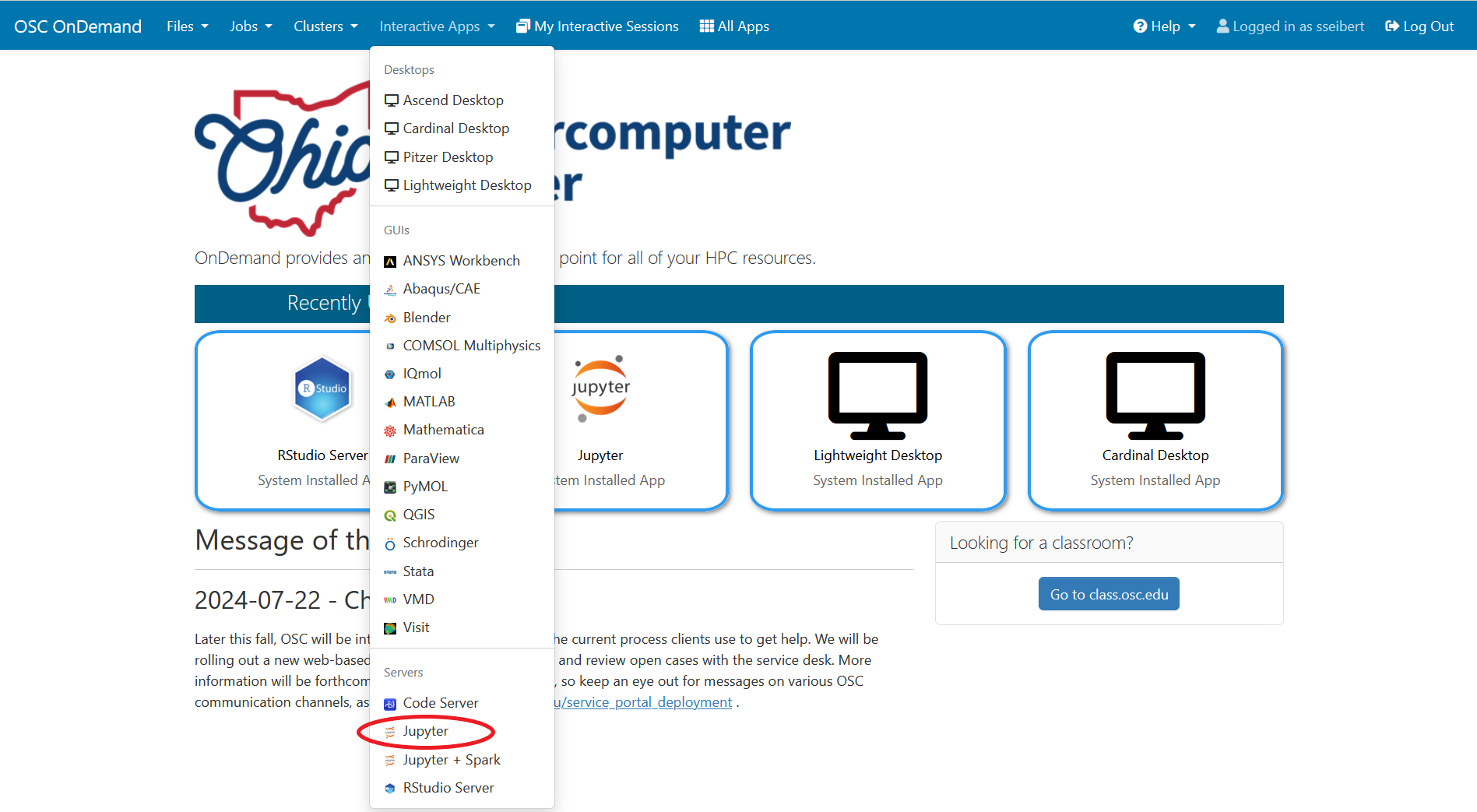

This page outlines how to use the Jupyter interactive app on OnDemand.

Log on to https://ondemand.osc.edu/ with your OSC credentials. Choose Jupyter under the Interactive Apps menu.

Rosetta is a software suite that includes algorithms for computational modeling and analysis of protein structures. It has enabled notable scientific advances in computational biology, including de novo protein design, enzyme design, ligand docking, and structure prediction of biological macromolecules and macromolecular complexes.

A PyTorch 2.4.0 conda environment is now available; load it with module load pytorch/2.4.0 (see the OSC PyTorch software page for more details).
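
As a quick sanity check after loading the module, the sketch below loads it and prints the PyTorch version that Python sees. The function name check_pytorch is a hypothetical helper, not an OSC-provided command, and the sketch assumes the module puts a python with torch installed on the PATH.

```shell
# Sketch only: check_pytorch is a hypothetical helper, not an OSC command.
check_pytorch() {
    # Default to the module name from the announcement above.
    mod="${1:-pytorch/2.4.0}"

    # `module` only exists where environment modules are set up (e.g. OSC clusters).
    if ! command -v module >/dev/null 2>&1; then
        echo "module command not found; run this on an OSC cluster" >&2
        return 1
    fi

    # Stop if the requested module cannot be loaded.
    module load "$mod" || return 1

    # Assumption: the loaded environment provides python with torch importable.
    python -c 'import torch; print(torch.__version__)'
}
```

On a cluster node, running check_pytorch pytorch/2.4.0 should print the installed PyTorch version; anywhere else it exits with status 1 and a short message.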

MVAPICH is an implementation of MPI, the standard library interface for performing parallel processing using a distributed-memory model.

Julia 1.10.4 is now available on Ascend. Load it with: module load julia/1.10.4