OSU grad student explores strengths, challenges of Cray, IBM, NVIDIA

Columbus, Ohio (March 28, 2012) – Surveying the wide range of parallel system architectures offered in the supercomputer market, an Ohio State University researcher recently sought to establish some side-by-side performance comparisons.

|

| Saniyah Bokhari |

The journal, Concurrency and Computation: Practice and Experience, in February published, "Parallel solution of the subset-sum problem: an empirical study." The paper is based upon a master's thesis written last year by computer science and engineering graduate student Saniyah Bokhari.

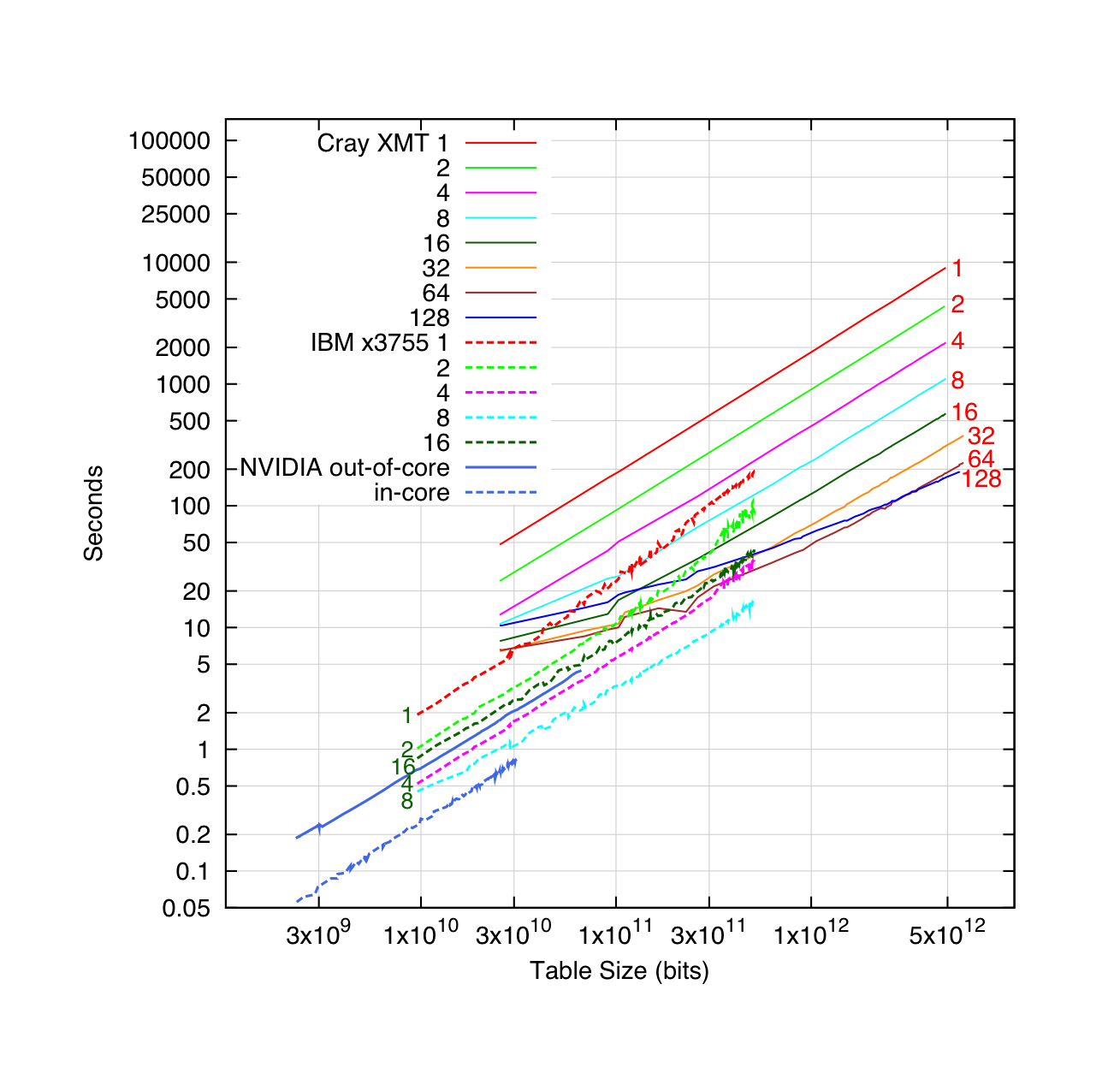

“We explore the parallelization of the subset-sum problem on three contemporary but very different architectures, a 128-processor Cray massively multithreaded machine, a 16-processor IBM shared memory machine, and a 240-core NVIDIA graphics processing unit,” said Bokhari. “These experiments highlighted the strengths and weaknesses of these architectures in the context of a well-defined combinatorial problem.”

Bokhari evaluated the conventional central processing unit architecture of the IBM 1350 Glenn Cluster at the Ohio Supercomputer Center (OSC) and the less-traditional general-purpose graphic processing unit (GPGPU) architecture, available on the same cluster. She also evaluated the multithreaded architecture of a Cray Extreme Multithreading (XMT) supercomputer at the Pacific Northwest National Laboratory’s (PNNL) Center for Adaptive Supercomputing Software.

"Ms. Bokhari's work provides valuable insights into matching the best high performance computing architecture with the computational needs of a given research community," noted Ashok Krishnamurthy, interim co-executive director of OSC. "These systems are continually evolving to incorporate new technologies, such as GPUs, in order to achieve new, higher performance measures, and we must understand exactly what each new innovation offers."

Each of the architectures Bokhari tested fall in the area of parallel computing, where multiple processors are used to tackle pieces of complex problems “in parallel.” The subset-sum problem she used for her study is an algorithm with known solutions that is solvable in a period of time that is proportional to the number of objects entered, multiplied by the sum of their sizes. Also, she carefully timed the code runs for solving a comprehensive range of problem sizes.

Bokhari concluded that the GPU performs well for problems whose tables fit within the limitations of the device memory. Because GPUs typically have memory sizes in the range of 10 gigabytes (GB), such architectures are best for small problems that have table sizes of approximately thirty billion bits.

She found that the IBM x3755 performed very well on medium-sized problems that fit within its 64-GB memory, but had poor scalability as the number of processors increased and was unable to sustain its performance as the problem size increased. The machine tended to saturate for problem with table sizes of 300 billion bits.

The Cray XMT showed very good scaling for large problems and demonstrated sustained performance as the problem size increased, she said. However, the Cray had poor scaling for small problem sizes, performing best with table sizes of a trillion bits or more.

“In conclusion, we can state that the NVIDIA GPGPU is best suited to small problem sizes; the IBM x3755 performs well for medium sizes, and the Cray XMT is the clear choice for large problems,” Bokhari said. “For the XMT, we expect to see better performance for large problem sizes, should memory larger than 1 TB become available.”

The Ohio Supercomputer Center (OSC), a member of the Ohio Technology Consortium of the Ohio Board of Regents, addresses the rising computational demands of academic and industrial research communities by providing a robust shared infrastructure and proven expertise in advanced modeling, simulation and analysis. OSC empowers scientists with the vital resources essential to make extraordinary discoveries and innovations, partners with businesses and industry to leverage computational science as a competitive force in the global knowledge economy, and leads efforts to equip the workforce with the key technology skills required to secure 21st century jobs. For more, visit www.osc.edu.

The Ohio State University Department of Computer Science and Engineering will impact the information age as a national leader in computing research and education. We will prepare computing graduates who are highly sought after, productive, and well-respected for their work, and who contribute to new developments in computing. We will give students in other disciplines an appropriate foundation in computing for their education, research, and experiences after graduation, consistent with computing's increasingly fundamental role in society. In our areas of research focus, we will contribute key ideas to the development of the computing basis of the information age, advancing the state of the art for the benefit of society, the State of Ohio, and The Ohio State University. We will work with key academic partners within and outside of OSU, and with key industrial partners, in pursuit of our research and educational endeavors. For more, visit http://www.cse.ohio-state.edu.