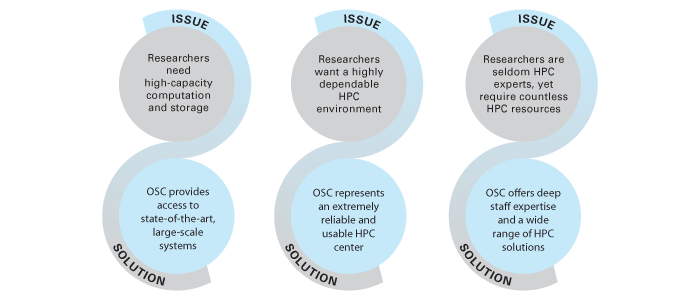

CAPACITY | RELIABILITY | CUSTOMIZATION

Since its creation in 1987, the Ohio Supercomputer Center (OSC) has worked to propel Ohio’s economy, from academic discoveries to industrial innovation. The Center provides researchers with high-end supercomputing and storage, domain-specific programming expertise and middle school-to-college-to-workforce education and training. Our mission is to empower our clients, partner strategically to develop new research and business opportunities, and lead Ohio’s knowledge economy. David Hudak, Ph.D., director of supercomputing operations, offers some insights on how the Center is supporting that mission.

Capacity

We want to work with researchers who need computational and storage capacities that far outstrip what they can reasonably expect from the machines in their offices. There are all kinds of benefits in having access to a state-of-the-art, modern computer center that deploys large-scale systems that are professionally maintained and monitored. The goal here is for researchers to be able to focus on their science and not on whether they’ve installed the latest software or what happens when their computer’s power cord gets unplugged. We also don’t want researchers to have to look elsewhere just because they think we lack capacity. If we currently do lack the capacity to meet their needs, we have the ability to add capacity.

Reliability

We’ve built an extremely reliable and exceedingly usable high performance computing environment here at OSC. We have a production software stack that is meticulously developed and maintained by a dozen experts. We’re focused on issues like security patches, performance improvements, latest versions, improved usability; there’s a bunch of work that’s gone into making our production environment as good as it is.

Customization

We offer clients domain science expertise in computational science, as well as in finite element analysis and computational fluid dynamics. Our clients are scientists with deep levels of expertise in their own fields of study – chemistry, physics, biology, pharmacology and so on, not HPC engineering. On top of that, our practitioners, more often than not, are graduate students who work for the scientists. They use our systems for about a year and a half, so there isn’t time for a long learning curve. We’re making our experts more available so they can provide customized solutions that bring scientific work into our production environment and help clients get the most they can out of our systems.

There’s also hardware customization, as we’re always looking for the right balance between specialization and mass production. For example, we offer a few big memory machines – nodes with one terabyte of RAM – for those experiments and codes that simply require a terabyte of RAM and can’t be split up across multiple nodes. Also, we’ll have Kepler GPU accelerators in the new Ruby Cluster to complement the existing Tesla GPUs we deployed in the Oakley Cluster. And we’ll be deploying Intel Xeon Phi coprocessors in Ruby as well. If we don’t have some particular feature that a researcher needs, I’d love to have a conversation to better understand that need and to find out if others across the state have the same need.

Partnerships

We’re always looking to build deep research partnerships. We want to identify research communities that need common computational research support, such as analytics. For instance, if several analytics research centers are built up at Ohio State, we can stand up production environments and hire experts to support their work, rather than have them replicate that in each lab. We want to expand our staff in a strategic way to address client needs in support of our mission.

Access

A researcher’s most valuable resource is their time. OSC OnDemand was our first large-scale initiative at the production level aimed at making HPC easier to use; we are trying to redesign and simplify the interfaces with our computing systems. We’re making MyOSC the single location for managing administrative functions. This way, if you need to work on a project, you go to OSC OnDemand; if you’ve got a report to file, a ticket to resolve, or software or training classes to access, you go to MyOSC.

Reorganization

Over the last year, we’ve pretty much reorganized the entire supercomputer operations staff. The overall goal was to scale and sustain operations and improve the reliability and service we provide to our clients. We now have a scope and mission for each of four production teams: HPC Systems (Doug Johnson), HPC Client Services (Brian Guilfoos), Scientific Applications (Karen Tomko) and Web & Interface Applications (Basil Gohar). These four groups have now come together to more easily devise a “complete solution” to address a client’s needs.

Case study: Move the work to where the data is.

Hudak: To support the work of the new Honda Simulation Innovation and Modeling Center at Ohio State, the basic, large-scale scalable computing is going to run at OSC. So that the researchers can be productive, our systems team has installed and integrated eight Windows machines into the production environment at our data center.

Researchers will be able to log in and do their preprocessing using the Windows applications that they already know and can use, then log into Oakley and submit the jobs to do the massive simulations, and, finally, log back into the Windows boxes to do the post-processing and visualizations. It was important that we “move the work to where the data is.” These Windows machines, sitting within our production environment, can touch all of our storage systems, and all of the machines touch the same data – that’s the model for today and into the future. Now, we’re doing the same thing with one of our engineering service providers in the AweSim program – you open up a mesh and specify some weld passes, generate some data files, feed them into an FEA solver that will run on 30 or 40 nodes to generate a few dozen terabytes of simulation data, and then run that through another thermal processor. If you can have all that stuff happen and don’t have to move the data around, it delivers a much, much faster time-to-solution.

Production Capacity

- 82+ million CPU core-hours delivered

- Over 3.3 million computational jobs run

- 835 TB data storage space in use

- 98% uptime (target: 96% cumulative uptime)

Client Services

- 24 universities served around the state

- 194 new projects awarded to Ohio faculty

- 948 individuals ran a computing simulation or analysis

- 330+ individuals attended 18 training opportunities